Facebook Relay: An Evil And/Or Incompetent Attack On REST

Let’s play it safe and assume the answer is both.

Facebook’s on an evil mission. It wants to convince you that the web is broken. So Facebook recently introduced Relay. To explain why you “need” Relay instead of REST, they made a series of claims about why REST is broken.

These claims are false.

It’s not that everybody who works at Facebook is necessarily evil. There are good reasons to assume that at least some folks at Facebook simply do not understand REST. But Facebook’s systematically attacking the foundations of the Open Web with embrace-and-extend tactics. Whether Facebook’s acting out of malice or foolishness, its criticisms of REST are part of that attack. Done correctly, REST is intrinsic to HTTP, and therefore fundamental to the web. And the things Facebook says about REST simply are not true.

“Complicated Object Graphs”

Here’s their first claim:

First, a REST endpoint can return whatever you want. If you have a complex object graph, there is nothing about REST which prevents you from returning it. If you only want a subset of that graph, make an endpoint around that subset.

Second, HTTP can fire network requests in parallel. That’s been possible for years. There is some overhead with each request, but it’s unlikely that’s your bottleneck, especially if we’re talking about large object graphs. And to the extent that any of this is a problem, HTTP/2 is already solving it.
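As a rough sketch of what parallel requests look like from the client side, here is a small Python example. Everything in it is an assumption made for illustration: the three resource paths, the throwaway local server standing in for an API, and the helper names `fetch` and `fetch_all`. It only shows that several fine-grained resources can be in flight at once.

```python
import json
import threading
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import ProxyHandler, build_opener

# Hypothetical fine-grained resources, standing in for pieces of an object graph.
RESOURCES = {
    "/users/1": {"id": 1, "name": "Ada"},
    "/users/1/friends": [2, 3],
    "/users/1/photos": ["a.png", "b.png"],
}


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = json.dumps(RESOURCES.get(self.path)).encode("utf-8")
        self.send_response(200 if self.path in RESOURCES else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # keep the demo quiet
        pass


# Ignore any proxy settings, since this demo only talks to localhost.
opener = build_opener(ProxyHandler({}))


def fetch(url):
    with opener.open(url, timeout=5) as response:
        return json.loads(response.read())


def fetch_all(base_url, paths):
    # One worker per path, so the requests are in flight at the same time.
    with ThreadPoolExecutor(max_workers=len(paths)) as pool:
        bodies = pool.map(fetch, [base_url + path for path in paths])
        return dict(zip(paths, bodies))


server = ThreadingHTTPServer(("127.0.0.1", 0), Handler)  # port 0: any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base = "http://127.0.0.1:%d" % server.server_address[1]
results = fetch_all(base, list(RESOURCES))
server.shutdown()
server.server_close()
```

A thread pool is just one way to do this; an event loop or a browser’s own connection handling would serve the same purpose.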

You want to avoid large object graphs anyway, for two reasons. Returning coarse-grained chunks of data tends to work against effective caching strategies, whether you’re using REST, Relay, or anything else. You have to cache the whole chunk or nothing. It’s entirely possible that an API using fine-grained resources will outperform a coarse-grained one, because its focus isn’t to optimize making requests, but rather to avoid them entirely. (Or, failing that, to avoid sending back a bandwidth-consuming response.)
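To make the “avoid sending back a bandwidth-consuming response” part concrete, here is a minimal sketch of a conditional GET using an `ETag` validator. The function names and the sample resource are hypothetical, and the comparison is simplified: a real server would also handle lists of tags and weak validators in `If-None-Match`.

```python
import hashlib
import json


def make_etag(body: bytes) -> str:
    # A validator derived from the bytes of the representation.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def conditional_get(resource, if_none_match=None):
    """Return (status, headers, body) for a GET on one fine-grained resource."""
    body = json.dumps(resource, sort_keys=True).encode("utf-8")
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "max-age=60"}
    if if_none_match == etag:
        return 304, headers, b""  # the client's copy is still good: no body sent
    return 200, headers, body


profile = {"id": 1, "name": "Ada"}

# First request: full response, and the client remembers the ETag.
status1, headers1, body1 = conditional_get(profile)

# Revalidation with an unchanged resource: 304 and an empty body.
status2, headers2, body2 = conditional_get(profile, headers1["ETag"])

# The resource changes, so the old ETag no longer matches.
profile["name"] = "Ada L."
status3, headers3, body3 = conditional_get(profile, headers1["ETag"])
```

The smaller the resource, the more often the cheap 304 path applies, because a change to one resource does not invalidate the cached copies of the others.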

You also want to avoid complicated object graphs because complicated object graphs are complicated. If you’re requesting some subset of a complicated object graph, and constantly re-negotiating which particular subset, then you have a problem which has nothing to do with REST. You’ve failed to identify the abstractions your code needs.

Facebook probably runs into this problem a lot. This is a guess, but their complex object graphs are probably optimized to represent their enormous social network, and all the creepy metadata they track. But gracefully correlating your data with your abstractions is one of the oldest, nastiest problems in software. That’s not a problem to solve at the level of network architecture.

API Versioning

Returning to the Relay blog post:

Invariably fields and additional data are added to REST endpoints as the system requirements change. However, old clients also receive this additional data as well, because the data fetching specification is encoded on the server rather than the client. As result, these payloads tend to grow over time for all clients. When this becomes a problem for a system, one solution is to overlay a versioning system onto the REST endpoints. Versioning also complicates a server, and results in code duplication, spaghetti code, or a sophisticated, hand-rolled infrastructure to manage it. Another solution to limit over-fetching is to provide multiple views – such as “compact” vs “full” – of the same REST endpoint, however this coarse granularity often does not offer adequate flexibility.

Literally every single one of these problems has nothing to do with HTTP or REST. HTTP has an elegant solution to the versioning problem, known as content negotiation.

Most alleged “REST” implementations underuse content negotiation, and underestimate the degree to which REST is intertwined with HTTP. But REST comes from Roy Fielding, a principal author of the HTTP spec. It’s not a coincidence.

If you don’t understand both REST and HTTP, you don’t understand either REST or HTTP. Because REST was in fact invented as a way to explain HTTP, during the first dot-com boom, to vendors whose proposed extensions didn’t fit HTTP’s philosophy (as described in sections 4.2 and 4.3 of Fielding’s dissertation, on pages 71 through 74).

To say that you’re using a REST API implies that you’re using features like content negotiation, and that you know what they’re for. This is one reason so many so-called “REST” implementations fail at even being REST implementations in the first place.

Over-fetching is easy to solve this way. Update the version attribute of your content type, and old clients will still get only the data they need.
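Here is one way that could look, as a sketch rather than a reference implementation. The media type `application/vnd.example.user+json`, its `version` parameter, the field lists, and the default-to-oldest rule are all assumptions made up for this example. It also ignores `q` values to keep the versioning logic visible.

```python
def parse_media_type(value):
    """Split 'type/subtype;k=v' into ('type/subtype', {'k': 'v'})."""
    parts = [part.strip() for part in value.split(";")]
    params = {}
    for part in parts[1:]:
        key, _, val = part.partition("=")
        params[key.strip().lower()] = val.strip().strip('"')
    return parts[0].lower(), params


USER = {
    "id": 1,
    "name": "Ada",
    "email": "ada@example.com",
    "avatar_url": "/avatars/1.png",
}

# Hypothetical: the fields each version of the representation exposes.
MEDIA_TYPE = "application/vnd.example.user+json"
FIELDS_BY_VERSION = {
    "1": ("id", "name"),
    "2": ("id", "name", "email", "avatar_url"),
}


def represent_user(accept_header):
    """Return (status, content_type, body) for the version the client asked for."""
    for item in accept_header.split(","):
        media_type, params = parse_media_type(item)
        # Assumption: a client that names no version gets the oldest one.
        version = params.get("version", "1")
        if media_type == MEDIA_TYPE and version in FIELDS_BY_VERSION:
            body = {key: USER[key] for key in FIELDS_BY_VERSION[version]}
            return 200, "%s;version=%s" % (MEDIA_TYPE, version), body
    return 406, None, None  # Not Acceptable


old_client = represent_user("application/vnd.example.user+json;version=1")
new_client = represent_user("application/vnd.example.user+json;version=2")
wrong_type = represent_user("text/html")
```

The old client keeps receiving the two fields it was written against, no matter how many fields later versions add.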

Facebook also seems to be unaware here that you can compress responses. Most of the time, over-fetching doesn’t matter because the difference in size, once compressed, is negligible. There are always edge cases, and you should optimize them when necessary, but there’s no reason to build your entire architecture around them.
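You can check this for your own payloads in a few lines. The sketch below compares a “compact” and a “full” version of the same made-up list of records, before and after gzip. The records and field names are invented, and the extra fields are deliberately repetitive, which is the friendly case; fields with unique per-record data will compress less well, so measure your real responses.

```python
import gzip
import json

# Hypothetical records: "full" adds fields that old clients never asked for.
compact = [{"id": i, "name": "user%d" % i} for i in range(200)]
full = [
    dict(record, locale="en_US", timezone="UTC", is_active=True, plan="free")
    for record in compact
]


def sizes(payload):
    """Return (raw_bytes, gzipped_bytes) for a JSON-serialized payload."""
    raw = json.dumps(payload).encode("utf-8")
    return len(raw), len(gzip.compress(raw))


compact_raw, compact_gz = sizes(compact)
full_raw, full_gz = sizes(full)

raw_gap = full_raw - compact_raw  # extra bytes before compression
gz_gap = full_gz - compact_gz     # extra bytes actually sent with gzip

print("raw:  %d vs %d bytes (gap %d)" % (compact_raw, full_raw, raw_gap))
print("gzip: %d vs %d bytes (gap %d)" % (compact_gz, full_gz, gz_gap))
```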

As to versioning, if the semantics of your API ever change, you’ll need it. Relay can’t solve that problem; Facebook’s making it sound like versioning is an artifact of REST, but versioning’s inherent to building software. Further, the idea that it results in “code duplication, spaghetti code, or a sophisticated hand-rolled infrastructure”—this is just all-out FUD. Sure, it can result in those things, but so can literally everything you build.

HTTP Has A Type System

Facebook’s next imaginary problem is utterly trivial to solve if you use content types correctly:

REST endpoints are usually weakly-typed and lack machine-readable metadata. While there is much debate about the merits of strong- versus weak-typing in distributed systems, we believe in strong typing because of the correctness guarantees and tooling opportunities it provides. Developer [sic] deal with systems that lack this metadata by inspecting frequently out-of-date documentation and then writing code against the documentation.

The correct way to version APIs is to use content types. And, although many people do not realize this, HTTP’s content types are a type system.

To quote one of our own blog posts on the topic:

use HTTP’s built-in type system, via standards like JSON Schema and libraries like JSCK. Your web server can guarantee that any incoming data matches its intended type before it ever gets to the application layer. And that means your application code has less clutter and simpler logic.

JSON Schema expands the type-checking which is already built into content types. JSCK is our own fast JSON Schema validator. If you use content types correctly, and also use JSON Schema with JSCK, you get strong typing over HTTP.

And, although JSON Schema is not part of HTTP proper, content types themselves are an intrinsic part of HTTP, and have been for a very long time. If you’ve got a protocol with a built-in type system, and you complain about it not having a type system, that’s just an indicator of your own ignorance.

Similarly, just because you claim your API is REST-based doesn’t make it true. Both REST and HTTP emphasize content negotiation and thus support strongly-typed interfaces.

A major design goal for HTTP and the REST architectural style is a thing called loose coupling. The entire point of loose coupling is to avoid exactly the problem described here:

Developer [sic] deal with systems that lack this metadata by inspecting frequently out-of-date documentation and then writing code against the documentation.

Okay, yeah, so don’t do that. Use custom content types with JSON Schema, and use JSON Schema to provide those types directly from within the API.
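As an illustration of the idea, here is a sketch that maps a content type to a schema and rejects bad bodies before application code sees them. It is not JSCK and not a full JSON Schema implementation: `validate` is a hand-rolled checker for a tiny subset (`type`, `required`, `properties`), and the media type, schema, and status-code choices are assumptions made for the example.

```python
import json

# Hypothetical custom media type, mapped to a JSON Schema document.
SCHEMAS = {
    "application/vnd.example.user+json": {
        "type": "object",
        "required": ["id", "name"],
        "properties": {
            "id": {"type": "integer"},
            "name": {"type": "string"},
        },
    },
}

JSON_TYPES = {
    "object": dict, "array": list, "string": str, "boolean": bool,
    "integer": int, "number": (int, float), "null": type(None),
}


def validate(instance, schema):
    """Return a list of error strings; an empty list means the instance conforms."""
    expected = schema.get("type")
    if expected is not None:
        ok = isinstance(instance, JSON_TYPES[expected])
        # bool is a subclass of int in Python, but not a JSON integer or number.
        if isinstance(instance, bool) and expected in ("integer", "number"):
            ok = False
        if not ok:
            return ["expected %s" % expected]
    errors = []
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append("missing required property '%s'" % key)
        for key, subschema in schema.get("properties", {}).items():
            if key in instance:
                errors += ["%s: %s" % (key, e) for e in validate(instance[key], subschema)]
    return errors


def check_request(content_type, raw_body):
    """Return (status, errors) before the body ever reaches application code."""
    schema = SCHEMAS.get(content_type)
    if schema is None:
        return 415, ["unsupported media type"]
    try:
        instance = json.loads(raw_body)
    except ValueError:
        return 400, ["body is not valid JSON"]
    errors = validate(instance, schema)
    return (422 if errors else 200), errors


USER_TYPE = "application/vnd.example.user+json"
good = check_request(USER_TYPE, '{"id": 1, "name": "Ada"}')
bad = check_request(USER_TYPE, '{"id": "1"}')
unknown = check_request("text/plain", "hi")
```

In a real service you would swap `validate` for a complete JSON Schema validator and serve the schema documents from the API itself, so clients can read the types instead of the documentation.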

Ever notice how the web is constantly adding new image, audio, and video formats, without everything breaking all the time? That’s content negotiation working. With HTTP. Because HTTP already has a type system built in.
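A simplified sketch of that negotiation, from the server’s side, might look like this. The function names are made up, and the matching is deliberately naive: it orders media ranges by `q` value only and skips the specificity rules a complete implementation would apply.

```python
def parse_accept(header):
    """Return [(media_range, q)] sorted by descending q, keeping header order for ties."""
    ranges = []
    for item in header.split(","):
        parts = [part.strip() for part in item.split(";")]
        q = 1.0
        for param in parts[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                q = float(value)
        ranges.append((parts[0].lower(), q))
    return sorted(ranges, key=lambda pair: -pair[1])


def matches(media_range, media_type):
    if media_range == "*/*":
        return True
    range_type, _, range_subtype = media_range.partition("/")
    main_type, _, subtype = media_type.partition("/")
    return range_type == main_type and range_subtype in ("*", subtype)


def choose(accept_header, available):
    """Pick the first available type matching the client's most preferred range."""
    for media_range, q in parse_accept(accept_header):
        if q <= 0:
            continue  # q=0 means "not acceptable"
        for media_type in available:
            if matches(media_range, media_type):
                return media_type
    return None


available = ["image/png", "image/webp"]

# A newer client prefers WebP; an older one has never heard of it.
newer = choose("image/webp,image/*;q=0.8", available)
older = choose("image/png,image/*;q=0.8", available)
nothing = choose("image/avif", ["image/png"])
```

The server can add a new format to `available` without touching the older client, which simply never asks for it.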

Move Fast And Break Things Which You Never Understood In The First Place

If you implement REST, you use content negotiation, and thereby eliminate versioning and typing issues. And, if you implement REST, you make available the endpoints you need, using parallel requests, caching, and compression to optimize your network traffic. Long story short, the major flaw with REST, according to Facebook, is that it doesn’t implement REST.

Facebook’s “move fast and break things” philosophy hurts its credibility here. If you’re too busy moving fast and breaking things to find out how REST works, you might settle for an incorrect implementation.

But if you encounter problems with a REST implementation, and you knew going in that you weren’t going to bother to fully implement REST, or even to find out how it works, then the problems with your implementation don’t necessarily have anything to do with REST.

To be fair, Facebook acknowledged this, sort of:

REST, an acronym for Representational State Transfer, is an architectural style rather than a formal protocol. There is actually much debate about what exactly REST is and is not. We wish to avoid such debates. We are interested in the typical attributes of systems that self-identify as REST, rather than systems which are formally REST.

But here’s a paraphrase: “we don’t want to get into the issue of what REST is or isn’t, we’re just going to criticize systems which call themselves REST systems, and then criticize these systems for their failure to employ critically important features of REST, and then we’ll say that this proves REST sucks.”

It does not prove any such thing. All it proves is that if you use an architectural style without bothering to learn how it works, you’ll probably run into trouble. But nobody really needed Facebook to prove that.

Facebook next quotes Roy Fielding, while arguing that REST is poorly-suited for internal APIs:

Many of these attributes are linked to the fact that “REST is intended for long-lived network-based applications that span multiple organizations” according to its inventor. This is not a requirement for APIs that serve a client app built within the same organization.

There is, again, intellectual dishonesty here. “If a system calls itself REST, but Roy Fielding would disagree, we’re still going to call it REST anyway. But, when it makes us look good, suddenly Fielding’s words will matter.” You can’t have your cake and eat it too.

Also, even a small startup may want other developers to have access to their API. And some organizations are large enough that they effectively have “multiple organizations” within them. Are we supposed to believe Facebook is not such an organization?

Facebook follows its Fielding quote with this:

Nearly all externally facing REST APIs we know of trend or end up in these non-ideal states, as well as nearly all internal REST APIs.

In other words: “nearly all APIs which claim to implement REST do not actually do so.” This is news? To whom?

You get these “non-ideal states” because most APIs claiming to be REST-based don’t make proper use of HTTP features like content negotiation and caching. That doesn’t mean there is a problem with REST. That just means developers need to make better use of HTTP.

In other words, your REST APIs suck because you don’t know HTTP.

Grow Up And Learn REST Already, Kids

You can answer every complaint Facebook has with “just use REST correctly.”

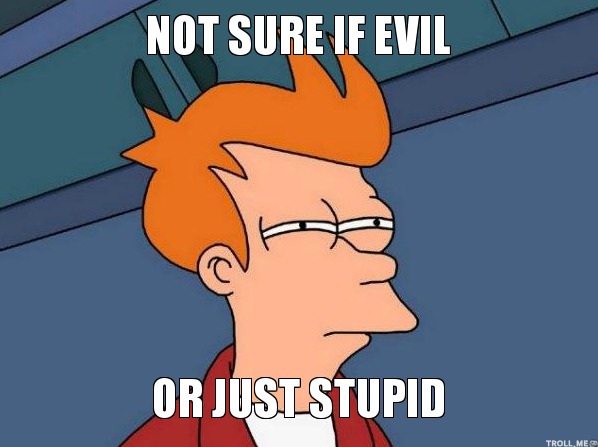

Some people don’t want to hear that. You see a lot of this:

But you never see it from people who actually know how Robin would have finished his sentence. Batman isn’t just the bad guy in the above picture — he’s also the child. It takes effort to figure out how REST and HTTP work, and you can have a fun career making bad software for misguided venture capitalists — or, indeed, successful social networks — without once ever putting forth any of that effort.

If that’s what you want to do, that’s your choice. But you can’t say “it doesn’t matter what you call it as long as it works” on the one hand, and then say, “look, it isn’t working, REST sucks” on the other.

Either the lobotomized, pop-culture version of REST works or it doesn’t. If it isn’t working — and it typically doesn’t — maybe we should listen to all those people saying, “hey, that isn’t REST.” People like Roy Fielding, perhaps. Who invented and defined the concept.

But again, Facebook chose something else. They ignored Fielding’s work, and then quoted him out of context. And then they set out to reinvent Fielding’s wheel, badly. Which is not that surprising if you know a little about Facebook’s culture.

What’s more glamorous, in a company which proclaims “move fast and break things”? Should you do your homework, and be the only person who actually knows what REST is? Or should you move fast, break HTTP, invent some new thing, and get a ton of attention? After all, by the time everybody notices that your new thing doesn’t work, you’ll be on to building some new thing that doesn’t work either.

Facebook’s a huge success, but it’s not as successful as HTTP. Roy Fielding, one of HTTP’s principal authors, has a success story under his belt that few other humans have achieved in the history of our species.

It’s not all about this one person, of course. The web’s got a deep history. But still. It could be worthwhile to listen to Fielding, when he talks about how his creation works. Since it is, after all, an epoch-defining success.

But Facebook did not choose this option.

Blaming REST For The Non-RESTfulness Of Fake “REST”

Facebook claims “REST” systems deteriorate:

Because of multiple round-trips and over-fetching, applications built in the REST style inevitably end up building ad hoc endpoints that are superficially in the REST style.

It’s hard to make sense of that. It literally does not even function as a sentence.

Here’s a version which does:

Because of multiple round-trips and over-fetching, the developers of applications built in the REST style inevitably end up building ad hoc endpoints that are superficially in the REST style.

However, although it now functions as a sentence, it still doesn’t really function as a coherent thought. Because, according to Facebook’s own initial disclaimer, in the apps that they’re looking at, the entire API was always “superficially in the REST style.”

So they’re first saying that we don’t need to care about the distinctions between APIs which are RESTful, and APIs which are not RESTful, but call themselves RESTful anyway. Then they’re saying that this distinction is actually important after all, but that it “inevitably” disappears “because of multiple round-trips and over-fetching.”

Let’s assume Facebook’s using “inevitably” to mean “often,” the same way some people use “literally” to mean “figuratively.” Either way, multiple round-trips and over-fetching indicate an API which is not RESTful. So that distinction was never really there.

[Ad hoc endpoints] actually couple the data to a particular view which explicitly violates one of REST’s major goals.

Right. Because they aren’t REST.

The Facebook Conspiracy Theory

Mainstream web dev thinking around REST is hopelessly confused. Most devs just never bothered to learn how HTTP really works. So it’s reasonable to assume that a lot of Facebook’s pitiful inaccuracy here is honestly pitiful and honestly inaccurate.

But put that next to React’s attack on the DOM and some of Facebook’s other initiatives. Facebook is trying to embrace and extend web technologies. If you know that phrase, you know another phrase: fear, uncertainty, and doubt. FUD was a strategy pioneered by Microsoft in the personal computer era. It stands for deliberately putting out bullshit ideas in the hopes of confusing people into submission.

So say you’re a little higher up the ladder at Facebook, and you actually understand REST, but you see a relatively junior engineer putting this nonsense up in public where people can read it. Do you stop them, or allow them to continue?

What happens if web devs don’t understand REST, and Facebook convinces them to abandon it for Relay?

Imagine that you’re Facebook, and your desired end game is AOL 2.0, as Jacques Mattheij put it:

The biggest internet players count users as their users, not users in general. Interoperability is a detriment to such plays for dominancy. So there are clear financial incentives to move away from a more open and decentralized internet to one that is much more centralized. Facebook would like its users to see Facebook as ‘the internet’…

If the current trend persists we’re heading straight for AOL 2.0, only now with a slick user interface, a couple more features and more users. I personally had higher hopes for the world wide web when it launched.

On an individual level, reading Facebook’s terrible post, we’re just dealing with web devs who don’t know what they’re talking about. This is not exactly an uncommon phenomenon. But at a corporate level, it’s more insidious. Facebook wants to replace REST with Relay for the same reason they want to replace DOM engines with React Native.

If you control the underlying paradigm, you can leverage that to control the platform. If you control the platform, you can charge rent.

Facebook’s whole business model rests on controlling your ability to communicate with your own social circle. Rent-seeking behavior has always been their hallmark. This is why it was so darkly hilarious when Google killed Google Reader so it could focus all its resources on building a second-rate Facebook clone.

Google’s answer to Facebook’s growing power as a rentier was to give Facebook an entirely new dominion to charge rent against. Who could have built best-of-breed RSS support into the most popular browser in the world? Who owns news discovery today?

But let’s focus on who will own the web tomorrow. Facebook already has huge swaths of the consumer population thinking Facebook is the Internet (there are also many countries where people report that they use Facebook, but not the Internet). They want to have huge swaths of the dev population believing the same thing.

It’s just not true. The Internet is TCP/IP; the web is HTTP. Facebook is just Facebook. It’s just another web company, making a ton of money off fundamental backbone technologies which it barely understands.

They reinvent wheels. They make those wheels square. They suffer a desperate, perpetual need for new talent. And it’s easy to understand how sincere that desperation is. All you have to do is read the terrible reasoning which the React team puts on their engineering blog. It’s the coolest team at the most successful company, and they don’t even know how the web works.