Risk And Uncertainty In Estimating Software Projects

Guesstimate is an awesome spreadsheet-like tool for dealing with uncertainty. Try using it to estimate your next project: you may be surprised at the results.

Estimating the cost and duration of software projects is, apparently, a hard problem. Thus, Hofstadter’s Law:

It always takes longer than you expect, even when you take into account Hofstadter’s Law.

I was reminded of this, and inspired to write this blog post, when I saw a Web app pop up in my Twitter feed. It’s called Guesstimate and it describes itself as “a spreadsheet for things that are uncertain.” There’s even a blog post explaining the idea. It’s a fantastic way to explore the impact even small amounts of uncertainty can have on costs.

People have been trying to figure out how to accurately forecast the cost and duration of software projects for almost as long as we’ve had programmable computers. I’ve put a lot of thought into it myself. At first, it’s a bit embarrassing. Other professions don’t seem to have this problem. Putting together the annual budget or developing an ad campaign aren’t giant mysteries. Why can’t our profession get its collective shit together, right? Eventually, I came to realize that maybe we weren’t to blame. That maybe estimating cost and duration of software projects was harder than it appeared. And maybe the real problem was pretending otherwise.

Wishful Thinking And Make-Believe

In fact, I think we do this a lot in the software business. This is partly wishful thinking and partly a consequence of having a software business in the first place. In business, you need to know how much something costs before taking action, or you risk putting yourself out of business (or getting fired). So we’ve pretended for decades now that we can know in advance how much a given piece of software will cost to build. We do this in spite of the fact that there are now literally decades of evidence to the contrary.

You can make a nice career for yourself if you’re good at this sort of make-believe. We’ve collectively developed techniques to help us, like time-boxing, measuring velocity, and so on. In effect, we’re telling our less technical colleagues, “Look, I know this is crazy, but I don’t know for sure when we’re going to finish. But, as you can see from these graphs and charts, we’re really trying here.” But that’s still a form of denial.

Building Software Is Hard

What we ought to be doing is taking into account that building software is a lot harder than it might seem, especially to less technical people. Consequently, there’s a lot more risk and uncertainty than it might seem. As business people, if we don’t know what a thing will cost, we need to admit that, and take it into account. We need to incorporate that risk and uncertainty into our planning. And I think there are three main reasons we don’t do this.

Fractal Complexity

First, we can’t always explain why there is risk and uncertainty. If I had a dollar for every time someone asserted that a given project was basically just a Web site and a database, I’d be rich. Hell, I’ve said it myself. But software projects tend to be fractally complex, which is a fancy way of saying the devil is in the details. There’s a great Quora post by Michael Wolfe illustrating the concept.

Shoot The Messenger

Second, acknowledging risk and uncertainty is not a popular thing to do. Software projects are often launched with a budget and timeframe already in mind. If you just come out and say, hey, that might not actually be possible, you’d better be ready for a difficult conversation. And you may just find yourself replaced in favor of someone who is still in denial about it. Or, giving them the benefit of the doubt, perhaps they simply assess risk more aggressively (and thus probably incorrectly).

The point is, there’s a strong incentive to be agreeable. A team led by Jessica Kennedy of The Wharton School studied this phenomenon:

Overconfidence engenders high status even after overconfident individuals are exposed as being less competent than they say they are. In a series of experiments, overconfident people suffered no loss of status after groups received clear, objective data about participants’ true performance on a task.

In other words, people will admire you more if you say you can come in on time and under budget, even if you fail to do so, than if you say you’re not sure, and succeed.

Humans Are Bad At Statistics

Third, we lack the training and toolset to measure and manage risk and uncertainty. This is hardly unique to software professionals. Nassim Nicholas Taleb wrote a best-selling book on the subject. Taleb maintains that ignoring risk and uncertainty is practically human nature. We tend to assume things follow a normal distribution, the way height or batting averages do. Extreme outliers are unlikely in those cases: there are no ten-foot-tall humans, and no one has hit .400 since 1941. But plenty of things have distributions in which extreme outliers are far more likely. Building software is one of them, as a charming XKCD comic eloquently illustrates.
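To make the point about outliers concrete, here is a small Python sketch comparing two distributions with the same mean and standard deviation: a normal one, and a right-skewed lognormal one of the kind sometimes used to model task durations. The 10-day median and the 0.7 log-scale spread are made-up numbers chosen for illustration, not measurements.

```python
import math


def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal Z; erfc stays accurate far into the tail."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical task: median duration of 10 days, spread of 0.7 on the log scale.
MEDIAN_DAYS = 10.0
LOG_SIGMA = 0.7

# Mean and standard deviation of that lognormal distribution.
mean = MEDIAN_DAYS * math.exp(LOG_SIGMA ** 2 / 2)
sd = mean * math.sqrt(math.exp(LOG_SIGMA ** 2) - 1)


def p_over_lognormal(days: float) -> float:
    """Chance the task takes longer than `days` under the lognormal model."""
    return upper_tail(math.log(days / MEDIAN_DAYS) / LOG_SIGMA)


def p_over_normal(days: float) -> float:
    """Same question for a normal distribution with the same mean and SD."""
    return upper_tail((days - mean) / sd)


for days in (20, 40, 60):
    print(f"P(> {days} days): normal {p_over_normal(days):.2g}, "
          f"lognormal {p_over_lognormal(days):.2g}")
```

With these made-up parameters, the normal model actually predicts more moderate (20-day) overruns, but at 60 days the lognormal puts the chance at about half a percent while the normal puts it at roughly two in a million: a difference of more than three orders of magnitude.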

These three factors reinforce one another, creating a vicious cycle. Since we don’t fully understand risk and uncertainty and can’t articulate them, it’s difficult to incorporate them effectively into our planning. We’re left to, more or less, shrug our shoulders in the face of questions and concerns from colleagues. We don’t want to say something can’t be done, because we don’t know for sure that it can’t. So we try our best and nervously study the trend line on burn-down charts, or talk excitedly about increasing our velocity in the weeks before a big release.

Expected Value

Fortunately, there are well-understood ways to quantify and articulate risk and uncertainty. In fact, businesses use many of them routinely; we just haven’t adapted them to our needs yet. I first noticed this when I became involved in sales. In a sales forecast, you assign a value and a probability to each opportunity. These are multiplied together to get an expected value for each opportunity, and the expected values are then summed to get an aggregate expected value. Of course, each individual expected value is meaningless on its own, since any given opportunity will end up worth either its full value (if it closes) or zero (if it doesn’t). But, taken together, they give you an accurate forecast. Well, as accurate as the probabilities you assign to them, anyway. And this is just one of the ways we routinely deal with risk and uncertainty in business planning every day.
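A minimal Python sketch of that kind of sales forecast follows. The pipeline, deal names, values, and probabilities are all invented for illustration. It also checks the forecast by enumerating every combination of deals closing or not, which assumes the deals close independently of one another.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class Opportunity:
    name: str
    value: float        # deal size if it closes, in dollars
    probability: float  # estimated chance of closing, between 0 and 1

    @property
    def expected_value(self) -> float:
        return self.value * self.probability


# Hypothetical pipeline; names and numbers are invented for illustration.
pipeline = [
    Opportunity("Renewal", 50_000, 0.9),
    Opportunity("Pilot", 120_000, 0.3),
    Opportunity("Upsell", 30_000, 0.5),
]

# The forecast is the sum of the individual expected values.
forecast = sum(opp.expected_value for opp in pipeline)

# Sanity check, assuming deals close independently: each deal is worth either its
# full value or zero, so enumerate every combination of outcomes and weight the
# resulting revenue by that combination's probability.
weighted_revenue = 0.0
for outcome in product((True, False), repeat=len(pipeline)):
    p = 1.0
    revenue = 0.0
    for opp, closed in zip(pipeline, outcome):
        p *= opp.probability if closed else 1 - opp.probability
        revenue += opp.value if closed else 0.0
    weighted_revenue += p * revenue

print(f"Forecast: ${forecast:,.0f} (check: ${weighted_revenue:,.0f})")
```

None of the eight possible outcomes here is actually $96,000, which is exactly the point made above: the individual expected values are fictions, but their sum is still a useful forecast.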

An Example

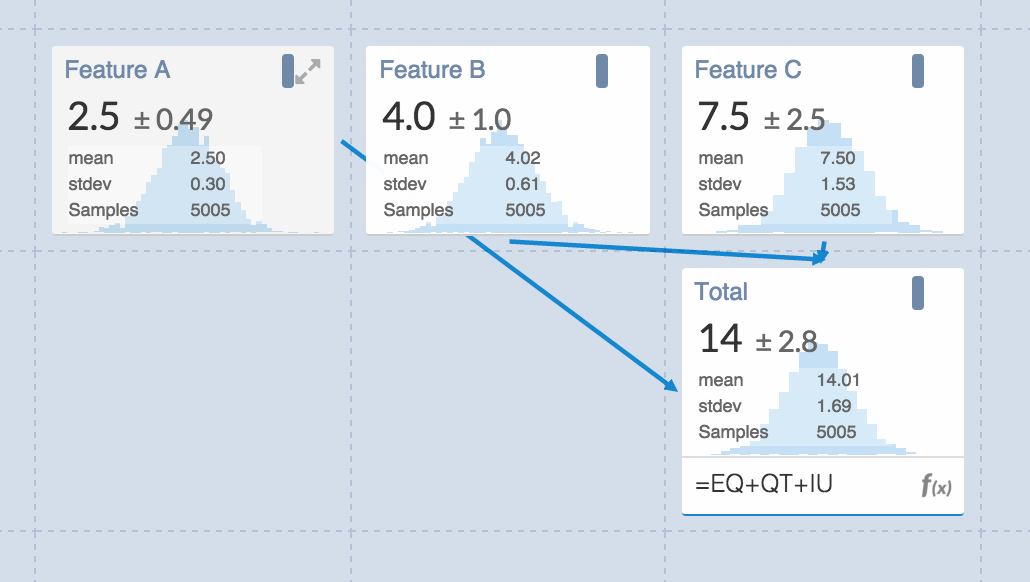

Using Guesstimate, I’ve put together an example of how a similar approach can be applied to estimating the cost of software projects. I’ve estimated the costs of three features, A, B, and C. For each feature, I’ve given a range for the potential cost in days; for feature A, for example, the range is 2 to 3 days. The total estimate for these three features comes to 14 days, plus or minus 2.7 days. It’s the plus-or-minus bit that we’ve been leaving out when we try to estimate costs. It means our possible costs range from 11.3 to 16.7 days. That’s a spread of more than a working week on a two-to-three-week project!
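Guesstimate does this kind of calculation by sampling. Here is a rough Python sketch in the same spirit. It assumes each low-high range is a 90% confidence interval of a normal distribution. Feature A’s range comes from the example above, but the ranges for B and C are hypothetical (chosen so the midpoints also total 14 days), so the spread it produces won’t match the ±2.7 in the screenshot.

```python
import random
import statistics
from statistics import NormalDist

# Half-width of a central 90% interval, in standard deviations (about 1.645).
Z90 = NormalDist().inv_cdf(0.95)

# Feature A's range is from the example; B and C are hypothetical.
features = {"A": (2, 3), "B": (4, 6), "C": (5, 8)}


def sample_days(low: float, high: float, rng: random.Random) -> float:
    """Draw one duration, treating (low, high) as a 90% interval of a normal."""
    mean = (low + high) / 2
    sd = (high - low) / (2 * Z90)
    return rng.gauss(mean, sd)


def simulate(features: dict, trials: int = 100_000, seed: int = 1) -> list:
    """Total duration of all features, sampled `trials` times."""
    rng = random.Random(seed)
    return [sum(sample_days(lo, hi, rng) for lo, hi in features.values())
            for _ in range(trials)]


totals = simulate(features)
cuts = statistics.quantiles(totals, n=20)  # cut points at 5%, 10%, ..., 95%
print(f"Total: {statistics.fmean(totals):.1f} ± {statistics.stdev(totals):.1f} days; "
      f"90% interval {cuts[0]:.1f} to {cuts[-1]:.1f} days")
```

Widening any one feature’s range in `features` and rerunning is a quick way to see how much the total’s spread depends on the least certain item.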

Planning Poker With Ranges

This is okay for small projects. We’ll literally say, “That will take 2-3 weeks,” and everyone nods, because the stakes are relatively low. But if we had 30-40 features instead of 3, and we were saying 2-3 months instead of weeks, that would start to become an issue. As complexity grows, we’re talking about swings of tens of thousands or even hundreds of thousands of dollars. What’s happening is that the uncertainty associated with each feature, or with each task required to complete it, adds up across the project as a whole. That’s how fractal complexity comes back to bite us later.
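The arithmetic behind this can be sketched in a few lines of Python. For independent features, variances add, so the total’s standard deviation grows with the square root of the number of features; if the features’ errors are fully correlated (say, because everyone shares the same misunderstanding of the requirements), the standard deviations themselves add. The 1.5-day per-feature standard deviation and the $5,000 cost per team-day are hypothetical.

```python
import math


def total_sd(per_feature_sd: float, n_features: int, correlated: bool) -> float:
    """Standard deviation of the sum of n features that share the same SD."""
    if correlated:
        # Fully correlated errors: standard deviations add linearly.
        return per_feature_sd * n_features
    # Independent errors: variances add, so the SD grows with sqrt(n).
    return per_feature_sd * math.sqrt(n_features)


PER_FEATURE_SD_DAYS = 1.5  # hypothetical
TEAM_DAY_COST = 5_000      # hypothetical dollars per team-day

for n in (3, 35):
    for correlated in (False, True):
        sd_days = total_sd(PER_FEATURE_SD_DAYS, n, correlated)
        print(f"{n:>2} features, correlated={correlated}: "
              f"±{sd_days:.1f} days (about ${sd_days * TEAM_DAY_COST:,.0f})")
```

With 35 features, one standard deviation is already about $44,000 in the independent case and about $260,000 in the fully correlated one, which lands squarely in the tens-to-hundreds-of-thousands range.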

Imagine if our planning poker sessions recorded the high and low estimates for each ticket (instead of attempting to reach a consensus) and then plugged these ranges into Guesstimate. Our estimates would look quite different! Of course, I’m hardly the first person to think of this. Among probably many other examples, Joel Spolsky wrote, back in 2007, about using Monte Carlo simulations to produce a probability curve of possible ship dates.
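As a rough sketch of what that could look like, the Python below takes the lowest and highest card played for each ticket, samples uniformly within each range (a deliberately simple assumption), and turns the simulated totals into a probability curve of finishing within a given number of days. The tickets are hypothetical, and this is a simplified illustration, not the method from Spolsky’s article.

```python
import random
from bisect import bisect_right

# Hypothetical tickets: (lowest card played, highest card played), in days.
tickets = [(1, 2), (2, 5), (1, 3), (3, 8), (2, 3), (5, 13)]


def simulate_totals(tickets, trials=20_000, seed=7):
    """Monte Carlo: sample each ticket uniformly within its range and sum them."""
    rng = random.Random(seed)
    totals = [sum(rng.uniform(lo, hi) for lo, hi in tickets) for _ in range(trials)]
    totals.sort()
    return totals


def p_done_within(sorted_totals, days):
    """Empirical CDF: the fraction of simulated totals that are <= days."""
    return bisect_right(sorted_totals, days) / len(sorted_totals)


totals = simulate_totals(tickets)
for days in (20, 24, 28, 32):
    print(f"P(done within {days} days) = {p_done_within(totals, days):.0%}")
```

Instead of a single number, the output is a set of statements like “we have roughly even odds of finishing within 24 days,” which is much closer to how a sales forecast is communicated.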

Confidence Levels

But this only addresses half the problem. The other half is that uncertainty in software projects is often non-linear. Whenever I saw a ticket estimated in weeks rather than days, I’d assume the estimate was meaningless unless and until we broke that ticket down into smaller ones. The reason is that uncertainty often masks hidden complexity. When we estimate that a given task will take us 1-2 weeks, what we’re often saying is that it will probably take us less than two weeks. What we don’t say is how confident we are, which is often “not very.”

You might expect that using ranges instead of fixed estimates takes care of that, but it doesn’t, not really. Building software is complex enough that broad ranges often imply low confidence, and we’re so accustomed to shoehorning this complexity into spreadsheets that we don’t account for it. That 1-2 weeks often really means 1-2 weeks at a low confidence level. Maybe we’ve never used the library that we’re counting on to get the task done. Or maybe we’re not sure of the requirements. If we were to stipulate that ranges must have a confidence level of 90% or higher, there are cases where we couldn’t provide one at all (or at least not a meaningful one) because there are too many things we don’t know.
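One way to see how much the confidence level matters: if we assume durations are lognormal and treat “1-2 weeks” as a central 50% interval (both are assumptions for this sketch; 50% is just one example of “not very”), we can work out what the corresponding 90% interval would have to be.

```python
import math
from statistics import NormalDist


def widen_interval(low, high, from_conf, to_conf):
    """Rescale a central (low, high) interval from one confidence level to
    another, assuming the underlying quantity is lognormally distributed."""
    z_from = NormalDist().inv_cdf(0.5 + from_conf / 2)
    z_to = NormalDist().inv_cdf(0.5 + to_conf / 2)
    mu = (math.log(low) + math.log(high)) / 2              # log of the median
    sigma = (math.log(high) - math.log(low)) / (2 * z_from)
    return math.exp(mu - z_to * sigma), math.exp(mu + z_to * sigma)


lo, hi = widen_interval(1, 2, from_conf=0.5, to_conf=0.9)
print(f"1-2 weeks at 50% confidence ≈ {lo:.1f}-{hi:.1f} weeks at 90% confidence")
```

Under those assumptions, “1-2 weeks” becomes roughly 0.6 to 3.3 weeks at 90% confidence, a range about 2.7 times as wide as the one we started with.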

The Biggest Source Of Uncertainty

Consequently, when development begins, we often cannot know even the range of possible values for the cost of the project, at least not with much confidence. What we can do, however, is enumerate the uncertainties and the risks. We can further divide these into technical risks and non-technical risks. The technical risks can usually be reduced by further investigation: testing that library, or building a proof-of-concept using that service, and so forth. And this is absolutely a useful and valuable thing to do.
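Enumerating risks doesn’t need special tooling. As a minimal sketch with hypothetical entries, a risk list can tag each item as technical or non-technical and attach an investigation (a spike or proof-of-concept) to the technical ones, so those can be scheduled as work while the rest are tracked and discussed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Kind(Enum):
    TECHNICAL = "technical"
    NON_TECHNICAL = "non-technical"


@dataclass
class Risk:
    description: str
    kind: Kind
    # Investigation that could reduce a technical risk (None if we have none).
    investigation: Optional[str] = None


# Hypothetical risks for an imaginary project.
risks = [
    Risk("We've never used the charting library we're counting on",
         Kind.TECHNICAL, "Build a throwaway chart with it"),
    Risk("Unclear whether the payment service supports partial refunds",
         Kind.TECHNICAL, "Proof-of-concept against its test environment"),
    Risk("Stakeholders picture different things when they say 'dashboard'",
         Kind.NON_TECHNICAL),
]


def partition(risks: List[Risk]) -> Tuple[List[Risk], List[Risk]]:
    """Split risks into (technical, non-technical)."""
    technical = [r for r in risks if r.kind is Kind.TECHNICAL]
    non_technical = [r for r in risks if r.kind is Kind.NON_TECHNICAL]
    return technical, non_technical


technical, non_technical = partition(risks)
for risk in technical:
    print(f"Investigate: {risk.investigation} ({risk.description})")
for risk in non_technical:
    print(f"Track and discuss: {risk.description}")
```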

The non-technical risks are often intractable, but that doesn’t mean they’re not worth enumerating. The biggest of these is uncertainty about what we’re actually building. Stakeholders in a project usually believe there isn’t any such uncertainty, which, of course, becomes part of the problem. Everyone has an idea in their head about what the project is, but those ideas often differ, sometimes in subtle ways.

Specs And Wireframes Are Overrated

Wireframes and other static forms of specification can help, but software is not static and therefore cannot be comprehensively specified that way. Some uncertainty will remain in all but the most trivial projects. This is a massive source of fractal complexity. And, basically, it’s the whole ballgame. It’s why one of the principles behind the Agile Manifesto states that working software is the primary measure of progress. The only really valid, high-fidelity specification for most software is, in fact, the software itself (including test code). That’s not to say specs and wireframes have no value, just that they’re overrated.

Eureka!

When you put all this together, the best you can say is something like, “I am 25% confident that the cost will be between $150,000 and $250,000 and that it will be done within six months.” Which is pretty much a useless statement. Building software involves so much risk and uncertainty that it’s hardly even worth the effort to quantify it. In fact, Peopleware, which may be the most widely-read-and-then-apparently-ignored book about managing software projects ever written, cites a study from way back in 1985 in which the most productive teams were those that proceeded without any estimates at all. (I’m a member of that club: I read the book back in the early 90s and apparently forgot this conclusion entirely.) In a more recent article, Peopleware co-author Tom DeMarco goes even further. He points out that accurately estimating costs only matters for projects where the benefit might be less than the cost. In other words, estimation is only valuable for the least valuable projects. Which constitutes a rather clever proof that spending time estimating costs is intrinsically worthless. I don’t really believe that, per se, but it’s an interesting point.

And so, one day, I just stopped estimating. I gave up. And the moment I did that, my eyes opened.

There is risk and uncertainty everywhere. We’re just so accustomed to it, we don’t notice it. Sure, the marketing department can plan that ad campaign, but they can’t be certain it will actually work. And we’ve already talked about sales forecasts. HR may have a recruiting plan, but they still don’t really know how many developers they’ll be able to hire. The CEO may have approved that annual budget, but can’t guarantee they’ll close that next round of funding. And so on.

I began to wonder, why were we, as software professionals, being held to a different standard?

The Importance Of Trust

I’m not going to answer that question in this blog post. What I will do is tell you what I think the solution is.

Trust.

Hire good people with a healthy work ethic. People you can trust. Empower them to do their jobs. And then give them the benefit of the doubt. And when you need to give estimates, clearly state that your estimates aren’t any different in nature from a sales forecast, a cash flow projection, or the ROI for an ad campaign. If pressed by colleagues who demand cost certainty from you, just say this:

I trust you to do your job. I’d like you to extend me the same courtesy.